
This was a major term for project implementation. Not only have we accomplished a lot in constructing the project, but we have also restructured the group in ways that have made our work better and more efficient. In this term report we look at the progress made this term and look ahead to the next one.

One of the first things we did this term was to split the group into smaller teams and divide the project into smaller tasks. We have broken the project into four tasks: the interface, analysis, the model, and vision. The interface covers the design and implementation of a test application for our code, as well as the final GUI. Joe is heading up the interface. Analysis deals with determining the feedback to present to the user; this is the part of the project that will actually critique the player’s actions. It has been put aside and will be tackled in future terms. Third, the model section deals with the model of the table that we present to the user. It consists of developing and implementing ball, table, and ball state objects, and of creating and using a game descriptor. Keith is heading up the model. Finally, the vision section deals with edge detection and circle detection, as well as with the "brains" of the vision part: a driver that can handle the detection our application will need. Carleton is heading up this section. These three sections (all except analysis) are presented in greater detail below.

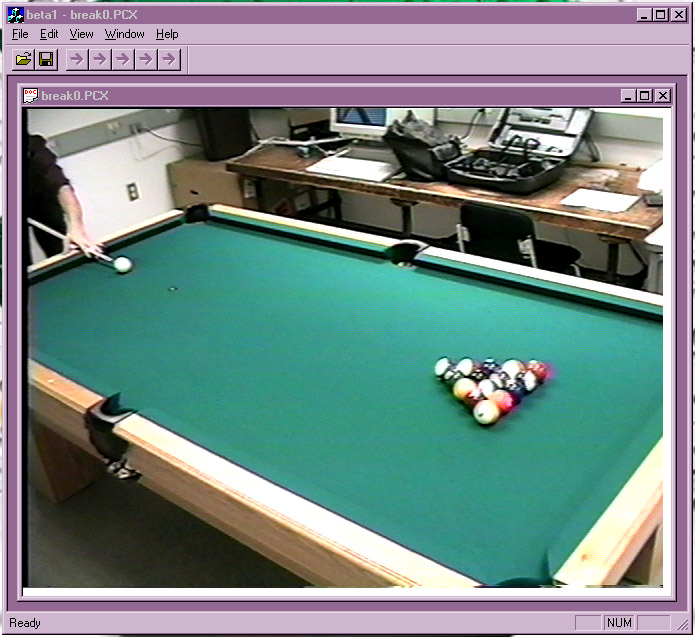

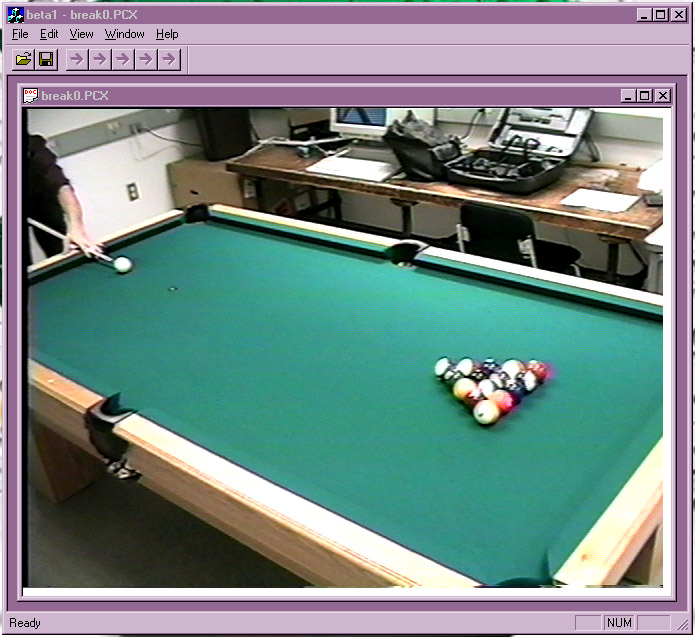

First we’ll take a look at the interface. The major goal for the interface this term was a working test interface for our code, and it is currently about 90% complete. We have a Windows multiple-document (MDI) application that can load any image that can be translated into our image class (currently only PCX files) and display it; each child window shows a picture generated from an image class. A toolbar provides several testing buttons, including one that lets the user open an image. Once an image is open, clicking one of the transform buttons sends the selected image to whatever code has been hooked up to that button, and the result, returned to the interface as a new image class, is displayed in a new child window. The test application supports multiple open documents, and a transformation can be applied to any of them: the source for a transformation is whichever child window is currently selected. As this paper is being written, the test application contains no transformation code. The toolbar buttons already exist, so adding a transformation only requires writing the event handler for the appropriate transform button so that it calls the transformation function. Figure 1 shows the application with one source image selected. Notice that the transform buttons (the arrows) are grayed out, because no transformation functions are installed in the application yet.
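To make the button-to-function wiring concrete, here is a minimal, framework-free sketch in standard C++17. `Image`, `Transform`, `TransformButtons` and `invert` are hypothetical names, not the project’s classes; the real application is an MFC program, where a button’s grayed-out state would normally be driven by an `ON_UPDATE_COMMAND_UI` handler. The sketch only models the logic described above: a button becomes enabled once a transformation is installed for it, and clicking it runs that transformation on the selected image and returns a new image for a new child window.

```cpp
#include <cstdint>
#include <functional>
#include <map>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical stand-in for our image class: 24-bit RGB, rows packed, 3 bytes per pixel.
struct Image {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> rgb;
};

// A transformation takes the selected source image and returns a new image.
using Transform = std::function<Image(const Image&)>;

// Maps toolbar button ids to installed transformations.
class TransformButtons {
public:
    void install(int buttonId, Transform fn) { slots_[buttonId] = std::move(fn); }

    // A button with no transformation installed is shown grayed out.
    bool isEnabled(int buttonId) const { return slots_.count(buttonId) != 0; }

    // Click handler: run the transformation on the selected image; the result
    // would be shown in a new child window. Empty if nothing is installed.
    std::optional<Image> onClick(int buttonId, const Image& selected) const {
        auto it = slots_.find(buttonId);
        if (it == slots_.end()) return std::nullopt;
        return it->second(selected);
    }

private:
    std::map<int, Transform> slots_;
};

// Example transformation: photographic negative of every color byte.
Image invert(const Image& src) {
    Image out = src;
    for (auto& b : out.rgb) b = static_cast<std::uint8_t>(255 - b);
    return out;
}
```

Keeping the transformations behind a single function type means the other groups can hand over, for example, an edge detector without touching the interface code.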


We will now show an example of the code used to implement the test application: the OnDraw function, which is called whenever Windows determines that our window needs to be refreshed (redrawn).

```cpp
// Called by MFC whenever Windows decides the view must be repainted.
void CBeta1View::OnDraw(CDC* pDC) {
    CBeta1Doc *pDoc = GetDocument();
    if (pDoc->m_pimgSource == NULL) return;   // no image loaded yet

    int nImgHeight  = pDoc->m_pimgSource->getHeight();
    int nImgWidth   = pDoc->m_pimgSource->getWidth();
    int nImgPixels  = nImgHeight * nImgWidth;
    int nColorDepth = pDoc->m_pimgSource->getColorDepth();   // bytes per pixel

    // Copy the raw pixel data out of our image class.
    BYTE *pBuffer = new BYTE[nImgPixels * nColorDepth];
    pDoc->m_pimgSource->copyImageData(pBuffer);

    // Walk the buffer one pixel (R, G, B bytes) at a time.
    // The step of 3 assumes 24-bit color, i.e. nColorDepth == 3.
    for (int i = 0; i < nImgPixels * nColorDepth; i += 3) {
        int x = (i % (nImgWidth * nColorDepth)) / 3;   // column
        int y = i / (nImgWidth * nColorDepth);          // row
        COLORREF nPixelColor = RGB(pBuffer[i], pBuffer[i + 1], pBuffer[i + 2]);
        pDC->SetPixelV(x, y, nPixelColor);
    }

    delete[] pBuffer;   // array form, to match new[]
}
```

This function reads our image class three bytes at a time; each group of three bytes holds the color values of one pixel. Because we know the width of the image, we can calculate the x and y position in the window of the pixel we just read. We convert the three color bytes into a Microsoft-defined COLORREF and then set the pixel at that position in the device context passed to the function. This is done every time the screen is redrawn.
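The index arithmetic in OnDraw can be pulled out into a small helper and checked on its own. This is a sketch with hypothetical names (`PixelPos`, `pixelAt`); like the loop above, it assumes rows are packed with no padding bytes at the end of each row.

```cpp
// Column and row of a pixel in a row-major image buffer.
struct PixelPos {
    int x;
    int y;
};

// Position of the pixel whose first byte is at byteIndex, for an image that is
// `width` pixels wide with `bytesPerPixel` bytes per pixel (3 for 24-bit RGB).
PixelPos pixelAt(int byteIndex, int width, int bytesPerPixel) {
    int rowBytes = width * bytesPerPixel;                 // bytes in one image row
    return { (byteIndex % rowBytes) / bytesPerPixel,      // offset within the row, in pixels
             byteIndex / rowBytes };                      // number of whole rows before it
}
```

With `bytesPerPixel` equal to 3 this reduces to the `/3` arithmetic used in OnDraw.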

Several steps remain to complete the last 10% of the test interface. First, the test functions have to be added to the application; this part depends on the other groups. For example, one sample transformation could be edge detection: opening any source image and clicking one of the transform buttons would display a new image with the edges detected. Second, we would like to add a timing function that displays how long a transformation takes. Clicking a transformation button would start a timer, which would stop when the test application receives the resulting image from the transformation function; the elapsed time would then be shown on the status bar. Being able to time transformations lets the user compare different methods of performing the same transformation. The final change is an optimization. Currently there is a long lag when a window is resized or moved. This is caused by the way the image is drawn: on every repaint, the data is converted from the image class and written to the child window pixel by pixel, which is slow. We propose instead to have the image class write its data once into memory, so that when a window needs to be painted the bits are simply copied from memory to the screen. This double buffering should greatly improve the drawing performance of the application.
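The proposed timing function could look roughly like the following sketch. `timeTransform` is a hypothetical name; it uses `std::chrono::steady_clock`, a monotonic clock, so the measurement is not affected by changes to the system time. In the test application, the transform button handler would wrap its call to the transformation function this way and put the returned milliseconds on the status bar.

```cpp
#include <chrono>
#include <utility>

// Calls transform(args...) and returns a pair of (result, elapsed milliseconds).
// Assumes the transformation returns a value (e.g. a new image).
template <typename F, typename... Args>
auto timeTransform(F&& transform, Args&&... args) {
    auto start = std::chrono::steady_clock::now();
    auto result = std::forward<F>(transform)(std::forward<Args>(args)...);
    auto stop = std::chrono::steady_clock::now();
    double ms = std::chrono::duration<double, std::milli>(stop - start).count();
    return std::make_pair(std::move(result), ms);
}
```

Timing only the call itself, and not the drawing of the result, keeps the comparison between two methods of the same transformation fair.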

There have been many hurdles in the interface that we’ve had to overcome, and there are more still ahead. One of the biggest problems has been getting Microsoft Visual Studio to do what we want; this is the only reason the in-memory drawing approach described above hasn’t been implemented yet. We currently have a single-window application that does what we want, in that it copies data from memory directly to the screen, but we are running into problems porting this small application to the multi-window environment. Now we’ll take a look at the vision section of the project.

After getting edge detection to work efficiently in A term, the next step toward getting CUE to "see" the pool table was to detect basic geometric shapes such as lines and circles. Line detection was intended to find the outline of the pool table, which would allow the computer to convert between image coordinates and model coordinates. Circle detection would then search the area of the image inside the pool table for circles, which would most likely be balls. The colors of the pixels inside each circle would then be used to guess which ball it is, and the circle’s center would give the ball’s position, so that the computer could build a model containing a list of ball state objects. Each ball state object would hold a center X and Y in model coordinates, while the ball’s color and whether it is striped are kept in the ball class.

For line detection, we chose the parameterized version of the Hough transform for lines. The Hough transform was chosen because it seemed to be the most common method of line detection and it was conceptually easy to understand. The parameterized version was chosen because, unlike the slope-intercept form, it has no trouble finding vertical lines, whose slopes are infinite.
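A minimal sketch of the parameterized Hough transform follows, assuming the usual normal form rho = x·cos(theta) + y·sin(theta), a row-major binary edge image, and 1° steps for theta. `HoughLine` and `strongestLine` are hypothetical names, not the project’s `houghLine` method. A vertical line is simply theta = 0, which is why this form avoids infinite slopes.

```cpp
#include <cmath>
#include <vector>

struct HoughLine {
    double rho;    // signed distance of the line from the image origin, in pixels
    double theta;  // angle of the line's normal, in radians, in [0, pi)
    int votes;     // number of edge pixels that voted for this line
};

// Returns the strongest line in a binary edge image (row-major, nonzero = edge).
HoughLine strongestLine(const std::vector<unsigned char>& edges,
                        int width, int height, int nTheta = 180) {
    const double kPi = 3.14159265358979323846;
    const int maxRho = static_cast<int>(std::ceil(std::hypot(width, height)));
    const int nRho = 2 * maxRho + 1;                 // rho ranges over [-maxRho, maxRho]
    std::vector<int> acc(nRho * nTheta, 0);          // accumulator, indexed [rho][theta]

    // Voting: every edge pixel votes once for each theta.
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            if (!edges[y * width + x]) continue;
            for (int t = 0; t < nTheta; ++t) {
                // A sin/cos lookup table would avoid recomputing these per pixel.
                double theta = t * kPi / nTheta;
                int r = static_cast<int>(std::lround(x * std::cos(theta) + y * std::sin(theta)));
                ++acc[(r + maxRho) * nTheta + t];
            }
        }

    // Peak search: the accumulator cell with the most votes (first one wins ties).
    HoughLine best{0.0, 0.0, -1};
    for (int t = 0; t < nTheta; ++t)
        for (int r = 0; r < nRho; ++r)
            if (acc[r * nTheta + t] > best.votes)
                best = {static_cast<double>(r - maxRho), t * kPi / nTheta, acc[r * nTheta + t]};
    return best;
}
```

For a 20 × 20 image whose only edge pixels form the column x = 5, this returns rho = 5, theta = 0 with 20 votes.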

The original version of the line detection correctly found lines. As expected, it also found artificial lines. These arise when unrelated points happen to lie along a common imaginary line and all vote for it. The most common example was the racked balls in pre-break shots. This was not a serious problem, because the artificial lines were always significantly shorter than the lines we wanted, so keeping only the strongest lines avoids them.

However, the original version did too much work and, more importantly, took significantly longer than desired. Instead of finding line segments, we decided that finding the four most significant lines, which form the edges of the pool table, would be sufficient. At the same time, the code was being ported to the Windows NT environment, and in the process it stopped working correctly. After trying without success to find the cause of the problems, I am now starting over from the last working version.
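Picking the four most significant lines amounts to taking the four highest peaks of the Hough accumulator while ignoring cells right next to a peak that has already been taken, since one thick table edge otherwise produces several nearly identical lines. The sketch below assumes a row-major accumulator indexed `[rho][theta]` and a square suppression window; `Peak` and `strongestPeaks` are hypothetical names. For simplicity it does not treat theta near 0 and theta near pi (with opposite rho) as the same line.

```cpp
#include <algorithm>
#include <vector>

struct Peak {
    int rhoIndex;
    int thetaIndex;
    int votes;
};

// Returns up to n peaks, strongest first. After each peak is taken, every cell
// within +/- window of it (in both rho and theta) is zeroed. The accumulator is
// taken by value so the caller's copy is left untouched.
std::vector<Peak> strongestPeaks(std::vector<int> acc, int nRho, int nTheta, int n, int window) {
    std::vector<Peak> peaks;
    while (static_cast<int>(peaks.size()) < n) {
        auto it = std::max_element(acc.begin(), acc.end());
        if (it == acc.end() || *it <= 0) break;            // no votes left
        int idx = static_cast<int>(it - acc.begin());
        Peak p{idx / nTheta, idx % nTheta, *it};
        peaks.push_back(p);
        for (int r = std::max(0, p.rhoIndex - window); r <= std::min(nRho - 1, p.rhoIndex + window); ++r)
            for (int t = std::max(0, p.thetaIndex - window); t <= std::min(nTheta - 1, p.thetaIndex + window); ++t)
                acc[r * nTheta + t] = 0;
    }
    return peaks;
}
```

For the table outline this would be called with n = 4.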

Circle detection proved more problematic than line detection. We used a modified version of Hough circle detection that takes advantage of the fact that all the balls should have roughly the same radius, which greatly reduces the number of circles that can pass through a given point. Currently, the only circles that circle detection consistently finds are in the noise of the image. This will be worked on over break in order to catch up with the schedule. The new classes that were added can be seen in Figure 2. Next we’ll take a look at the conversion between image coordinates and model coordinates.
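The fixed-radius idea can be sketched as follows. This is an illustration under stated assumptions, not the project’s circle detector: the edge image is binary and row-major, the radius is known in pixels, and `CircleHit` and `strongestCircle` are hypothetical names. Because the radius is fixed, each edge pixel votes only for the centers lying exactly one radius away from it, so the accumulator is two-dimensional (one cell per candidate center) instead of three-dimensional.

```cpp
#include <algorithm>
#include <cmath>
#include <utility>
#include <vector>

struct CircleHit {
    int cx;
    int cy;
    int votes;
};

// Fixed-radius Hough circle detection on a binary edge image (row-major, nonzero = edge).
CircleHit strongestCircle(const std::vector<unsigned char>& edges,
                          int width, int height, int radius, int nSteps = 360) {
    const double kPi = 3.14159265358979323846;

    // Distinct integer offsets from a center to points on the circle, so that
    // one edge pixel never votes twice for the same center.
    std::vector<std::pair<int, int>> offsets;
    for (int s = 0; s < nSteps; ++s) {
        double a = 2.0 * kPi * s / nSteps;
        std::pair<int, int> o(static_cast<int>(std::lround(radius * std::cos(a))),
                              static_cast<int>(std::lround(radius * std::sin(a))));
        if (std::find(offsets.begin(), offsets.end(), o) == offsets.end())
            offsets.push_back(o);
    }

    // Voting: one accumulator cell per candidate center.
    std::vector<int> acc(width * height, 0);
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            if (!edges[y * width + x]) continue;
            for (const auto& o : offsets) {
                int cx = x - o.first, cy = y - o.second;
                if (cx >= 0 && cx < width && cy >= 0 && cy < height)
                    ++acc[cy * width + cx];
            }
        }

    // The center with the most votes.
    CircleHit best{0, 0, -1};
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x)
            if (acc[y * width + x] > best.votes) best = {x, y, acc[y * width + x]};
    return best;
}
```

Finding every ball rather than just the strongest one would use the same kind of peak selection as for lines, with N set to the number of balls expected.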

| Class | Description |
|---|---|
| Circle | Creates a circle object and draws it |
| CircleList | Creates a list of Circles, adds a Circle, and draws all of the Circles |
| Line | Creates a line object and draws it |
| LineList | Creates a list of Lines, adds a Line, and draws all of the Lines |
| MyMath | Creates look-up tables for sin and cos calculations |
| Vision | Heart of the vision portion of CUE. Creates a vision object, then processes an image and returns a model. Private methods include: FindEdges, which produces an image of the edges of a captured image; houghLine, which produces a LineList containing the N lines found in an edge image; circleLine, which produces a CircleList containing the N circles found in an edge image. |
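The MyMath look-up tables exist because the Hough transforms evaluate sin and cos for the same angles millions of times. A minimal sketch of such a table at whole-degree resolution follows; `TrigTable` is a hypothetical stand-in, and the actual MyMath interface and resolution are not described here.

```cpp
#include <cmath>
#include <vector>

// Precomputed sin and cos for every whole degree in [0, 360).
class TrigTable {
public:
    TrigTable() : sin_(360), cos_(360) {
        const double kPi = 3.14159265358979323846;
        for (int d = 0; d < 360; ++d) {
            sin_[d] = std::sin(d * kPi / 180.0);
            cos_[d] = std::cos(d * kPi / 180.0);
        }
    }

    // Angles outside [0, 360) are wrapped into range first.
    double sinDeg(int deg) const { return sin_[wrap(deg)]; }
    double cosDeg(int deg) const { return cos_[wrap(deg)]; }

private:
    static int wrap(int deg) {
        int d = deg % 360;
        return d < 0 ? d + 360 : d;
    }

    std::vector<double> sin_;
    std::vector<double> cos_;
};
```

Building the table once, and then only indexing into it inside the voting loops, trades 2 × 360 doubles of memory for the repeated library calls.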

There is a problem with using the video image straight from the camera. Because of where the camera has to be placed, our view of the table is heavily skewed (see the picture on the front cover). We have to be able to convert from this skewed view to a real-life view in which the table is a perfect rectangle with sides in a two-to-one ratio. This will be done with a transformation matrix. George has been heading up the team that is determining this matrix. Using Maple on the WPI network, we were able to avoid doing very ugly matrix calculations by hand. Below is the part of our matrix class that creates the transformation matrix.

```cpp
// Builds the 3x3 transformation matrix M that maps each image point i[k]
// onto the matching world point w[k], i.e. M * i[k] = w[k] for k = 0, 1, 2
// (M = W * inverse(I), where the columns of I are i[0..2] and the columns
// of W are w[0..2]). The expressions are Maple's expansion of that product.
Matrix::Matrix( Point w[3], Point i[3] ) {
    this->width = 3;
    this->height = 3;
    element = new double[9];

    // Determinant of I: the common denominator of all nine elements.
    double temp = -i[0].x*i[2].y*i[1].z - i[0].y*i[1].x*i[2].z + i[0].y*i[2].x*i[1].z + i[0].x*i[2].z*i[1].y + i[0].z*i[1].x*i[2].y - i[0].z*i[2].x*i[1].y;

    // Row 0 uses the x coordinates of the world points.
    setElement(0,0, (w[2].x*i[0].y*i[1].z - w[2].x*i[0].z*i[1].y + w[1].x*i[0].z*i[2].y - i[1].z*w[0].x*i[2].y - i[2].z*i[0].y*w[1].x + i[2].z*w[0].x*i[1].y)/(temp) );
    setElement(0,2, (-i[0].x*i[2].y*w[1].x + i[0].y*i[2].x*w[1].x - i[0].y*i[1].x*w[2].x + w[0].x*i[1].x*i[2].y - w[0].x*i[2].x*i[1].y + i[0].x*w[2].x*i[1].y)/(temp) );
    setElement(0,1, - (-i[1].x*w[2].x*i[0].z + i[1].x*i[2].z*w[0].x + w[1].x*i[2].x*i[0].z + w[2].x*i[0].x*i[1].z - i[2].x*i[1].z*w[0].x - i[2].z*i[0].x*w[1].x)/(temp) );

    // Row 1: the same expressions with w[k].x replaced by w[k].y.
    setElement(1,1, - (-i[1].x*w[2].y*i[0].z + i[1].x*i[2].z*w[0].y + w[1].y*i[2].x*i[0].z + w[2].y*i[0].x*i[1].z - i[2].x*i[1].z*w[0].y - i[2].z*i[0].x*w[1].y)/(temp) );
    setElement(1,0, (w[2].y*i[0].y*i[1].z - w[2].y*i[0].z*i[1].y + w[1].y*i[0].z*i[2].y - i[1].z*w[0].y*i[2].y - i[2].z*i[0].y*w[1].y + i[2].z*w[0].y*i[1].y)/(temp) );
    setElement(1,2, (-i[0].x*i[2].y*w[1].y + i[0].y*i[2].x*w[1].y - i[0].y*i[1].x*w[2].y + w[0].y*i[1].x*i[2].y - w[0].y*i[2].x*i[1].y + i[0].x*w[2].y*i[1].y)/(temp) );

    // Row 2: the same expressions with w[k].x replaced by w[k].z.
    setElement(2,2, (-i[0].y*i[1].x*w[2].z + i[0].y*w[1].z*i[2].x + i[0].x*w[2].z*i[1].y + w[0].z*i[1].x*i[2].y - w[0].z*i[2].x*i[1].y - i[0].x*w[1].z*i[2].y)/(temp) );
    setElement(2,1, - (-i[1].x*w[2].z*i[0].z + i[1].x*i[2].z*w[0].z + w[2].z*i[0].x*i[1].z - i[2].x*i[1].z*w[0].z + i[2].x*w[1].z*i[0].z - i[2].z*i[0].x*w[1].z)/(temp) );
    setElement(2,0, (-w[1].z*i[0].y*i[2].z + w[1].z*i[0].z*i[2].y - w[2].z*i[0].z*i[1].y + i[1].y*i[2].z*w[0].z + w[2].z*i[0].y*i[1].z - i[1].z*w[0].z*i[2].y)/(temp) );
}
```

These ugly formulas will create the transformation matrix we need. This matrix class can be seen in its entirety at the end of this paper.
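The Maple expressions expand M = W · inverse(I), where the columns of I are the three image points and the columns of W are the three world points. Written with cross products, the same computation is short enough to check by hand: row k of inverse(I) is a cross product of the other two image points divided by det(I). The sketch below is an illustration of that identity, not our Matrix class; `Vec3`, `Mat3`, `mapThreePoints` and `transformPoint` are hypothetical names, and the image points are assumed to be linearly independent so that det(I) is not zero.

```cpp
#include <array>

struct Vec3 { double x, y, z; };
using Mat3 = std::array<std::array<double, 3>, 3>;

Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
double coord(const Vec3& v, int n) { return n == 0 ? v.x : (n == 1 ? v.y : v.z); }

// Returns M with M * img[k] == world[k] for k = 0, 1, 2, i.e. M = W * inverse(I).
Mat3 mapThreePoints(const Vec3 world[3], const Vec3 img[3]) {
    // Row k of inverse(I) is inv[k] / det(I): each cross product is orthogonal
    // to the other two image points, and inv[k] . img[k] == det(I).
    const Vec3 inv[3] = {cross(img[1], img[2]), cross(img[2], img[0]), cross(img[0], img[1])};
    const double det = dot(img[0], inv[0]);
    Mat3 m{};
    for (int r = 0; r < 3; ++r)
        for (int c = 0; c < 3; ++c) {
            double sum = 0.0;
            for (int k = 0; k < 3; ++k) sum += coord(world[k], r) * coord(inv[k], c);
            m[r][c] = sum / det;
        }
    return m;
}

// Multiplies M by a point given as a column vector.
Vec3 transformPoint(const Mat3& m, const Vec3& v) {
    return {dot({m[0][0], m[0][1], m[0][2]}, v),
            dot({m[1][0], m[1][1], m[1][2]}, v),
            dot({m[2][0], m[2][1], m[2][2]}, v)};
}
```

Every element of the result matches the corresponding Maple-generated expression, which makes this a convenient cross-check for the generated code.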

During B term we finalized the design of the classes representing the model of the world and implemented them with basic functionality. The classes are Ball, BallState and Table.

The Ball class describes the standard set of balls used in various pool games: the 1 through 15 balls and the cue ball. The approximate color of each ball, as well as a sample image of each ball, can be returned on request. A ball’s label (what it is called) and whether or not the ball is a stripe are also provided. The labels are strings and are defined in Ball for ease of use. The user cannot change this information yet; within the next couple of days, the user will be able to supply his or her own sample image or color value for a particular ball. This will be useful if the default settings do not work well for a particular setup of CUE.

The BallState class is a simple object that holds a particular ball’s position on the table and its movement across the table. BallState also keeps track of whether the ball is on the table; if it is not, its position and movement are not available. The user can change the position, the movement and the on/off table information.

The Table class is derived from the Ball class, so a Table has all the information about a particular set of balls. It keeps an array of BallStates for the balls in play. The methods of the BallState class are mirrored in Table, with an extra argument giving the label of the ball of interest. Table is the only class users need to be concerned with. The Ball method that returns the list of labels for which ball information is available is overridden in Table to return only the balls in play. A time stamp for this object will be implemented later.
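The relationship between Table and its ball states can be sketched in a few lines of modern C++. This is a simplified, hypothetical model (`BallStateSketch`, `TableSketch`), not the project’s classes: it keeps only the x coordinate and the on/off flag, keys the states by label, and follows the documented conventions of returning 1 on success and -1 for a ball not in use. As a design alternative, the getter returns `std::optional<float>` instead of NULL, because with the usual `#define NULL 0` a NULL returned from a float function is indistinguishable from a ball at x = 0.

```cpp
#include <map>
#include <optional>
#include <string>

// Hypothetical, simplified stand-in for BallState.
struct BallStateSketch {
    float x = 0.0f;
    bool onTable = false;
};

// Hypothetical, simplified stand-in for Table: one state per ball in play, keyed by label.
class TableSketch {
public:
    void addBall(const std::string& label) { balls_[label] = BallStateSketch{}; }

    // Returns 1 on success, -1 if the ball is not in use.
    // Setting a coordinate marks the ball as being on the table.
    int setX(const std::string& label, float x) {
        auto it = balls_.find(label);
        if (it == balls_.end()) return -1;
        it->second.x = x;
        it->second.onTable = true;
        return 1;
    }

    // Marks the ball as off the table; 1 on success, -1 if not in use.
    int setOffTable(const std::string& label) {
        auto it = balls_.find(label);
        if (it == balls_.end()) return -1;
        it->second.onTable = false;
        return 1;
    }

    // Empty when the ball is unknown or off the table.
    std::optional<float> getX(const std::string& label) const {
        auto it = balls_.find(label);
        if (it == balls_.end() || !it->second.onTable) return std::nullopt;
        return it->second.x;
    }

private:
    std::map<std::string, BallStateSketch> balls_;
};
```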

I also took on the task of writing a function that identifies a particular ball from a clipped image of that ball. It is nearly complete but needs a bit more testing, and it will end up in the Vision section of the project that Carleton is heading up. Currently the function takes a weighted average of the pixel values in the provided image and compares that average with the color values provided by a Ball object. It has not been tested with striped balls yet.
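A minimal sketch of that approach follows: compute a weighted average color of the clipped image, then pick the reference color that is nearest in RGB space. The weighting scheme is not specified above, so here the caller supplies one weight per pixel, and squared Euclidean distance is an assumed choice of comparison. `Rgb`, `BallColor`, `weightedAverage`, `closestBall`, and the labels and colors in the usage check are all hypothetical.

```cpp
#include <cstddef>
#include <limits>
#include <string>
#include <vector>

struct Rgb { double r, g, b; };
struct BallColor { std::string label; Rgb color; };

// Weighted average color of a clipped ball image; weights[i] is the weight of pixels[i].
Rgb weightedAverage(const std::vector<Rgb>& pixels, const std::vector<double>& weights) {
    Rgb sum{0.0, 0.0, 0.0};
    double total = 0.0;
    for (std::size_t i = 0; i < pixels.size() && i < weights.size(); ++i) {
        sum.r += weights[i] * pixels[i].r;
        sum.g += weights[i] * pixels[i].g;
        sum.b += weights[i] * pixels[i].b;
        total += weights[i];
    }
    if (total > 0.0) { sum.r /= total; sum.g /= total; sum.b /= total; }
    return sum;
}

// Label of the reference color nearest to avg (squared distance in RGB space).
std::string closestBall(const Rgb& avg, const std::vector<BallColor>& refs) {
    std::string best;
    double bestDist = std::numeric_limits<double>::max();
    for (const BallColor& ref : refs) {
        double dr = avg.r - ref.color.r, dg = avg.g - ref.color.g, db = avg.b - ref.color.b;
        double d = dr * dr + dg * dg + db * db;
        if (d < bestDist) { bestDist = d; best = ref.label; }
    }
    return best;
}
```

A single average color is also why striped balls are harder: the white part of the ball pulls the average toward the cue ball’s color.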

Before C term starts, the classes and functions mentioned above will be fully implemented and tested. During C term I will work on a graphical representation of a Table object for the user interface, which will allow users to click on balls to call a shot. The design of the CUE interface will also be finalized and implemented to handle user input. Algorithms for critiquing shots will also be explored. This involves developing the analysis section of the project, which uses a vision object to obtain state information from the incoming image data and must decide which frames to actually examine in order to identify events. Once all the events in a particular shot have been found, the shot can be analyzed.

List of Classes and Methods Implemented so far:

Ball class:

```cpp
Ball();                      // constructor
virtual ~Ball();             // destructor
int IsStripe(char *);        // returns 1 if the specified ball is a stripe, 0 otherwise
BallState * State(char *);   // returns a state object for the specified ball
Image * SampleOf(char *);    // returns a sample image of the requested ball
COLOR ColorOf(char *);       // returns the color of the ball with the specified label;
                             // if the ball is unknown, returns NULL
```

BallState class:

```cpp
BallState();                 // constructor
BallState(char *);           // constructor - sets label
virtual ~BallState();        // destructor
char * GetLabel(void);       // returns label of ball

// Setters: each sets the data member named by the function.
// Setting x or y marks the ball as on the table.
void SetX(float);
void SetY(float);
void SetMoveX(float);
void SetMoveY(float);

// Getters: each returns the data member named by the function;
// if the ball is not on the table, returns NULL.
float GetX(void);
float GetY(void);
float GetMoveX(void);
float GetMoveY(void);

int OffTable(void);          // returns 1 if ball is not on table, 0 if ball is on table
void SetOffTable(void);      // marks the ball as being off the table
```

Table class:

```cpp
Table();                     // constructor
virtual ~Table();            // destructor

// All methods return NULL or -1 if the requested ball is not in use.
// Methods with an int return value return 1 for success.

BallState * State(char *);   // returns the current state of the requested ball

// Setters: same as in BallState
int SetX(char *, float);
int SetY(char *, float);
int SetMoveX(char *, float);
int SetMoveY(char *, float);
int SetOffTable(char *);     // marks the specified ball as being off the table

// Getters: same as in BallState
float GetX(char *);
float GetY(char *);
float GetMoveX(char *);
float GetMoveY(char *);
int OffTable(char *);        // returns 1 if ball is not on table, 0 if ball is on table
```

These three sections were the focus of this term’s work. Although each section has a head, everyone contributed to the advancement of every section. We have a long road ahead of us, but all of us are looking forward to creating an excellent software application and learning a lot along the way. We also look forward to a small addition to our group for the month of January.

Early next term we hope to finalize the test application, which entails completing the tasks mentioned above. Once the test application is complete, we will begin developing the final GUI that the test application is paving the way for. We have overcome many of the problems encountered while developing the test application, so when we carry out the same tasks in the final GUI we expect to avoid those problems and delays. We would also like to be able to display a model of the table. Ideally, we will begin implementing the analysis of pool games before the end of C term.
